Google AI Models

Artificial Intelligence (AI) is revolutionizing the way we interact with technology. Google, one of the tech industry leaders, has been at the forefront of developing powerful AI models to solve real-world problems, improve productivity, and enhance user experiences. These AI models are designed to understand, generate, and interact with human language, images, and data across various domains.

What Are Google AI Models?

Google AI models are advanced machine learning systems developed by Google to perform complex tasks such as natural language understanding, image recognition, code generation, and multimodal data analysis. These models are trained on massive datasets and are integrated into Google products like Search, Assistant, Gmail, and Cloud services.

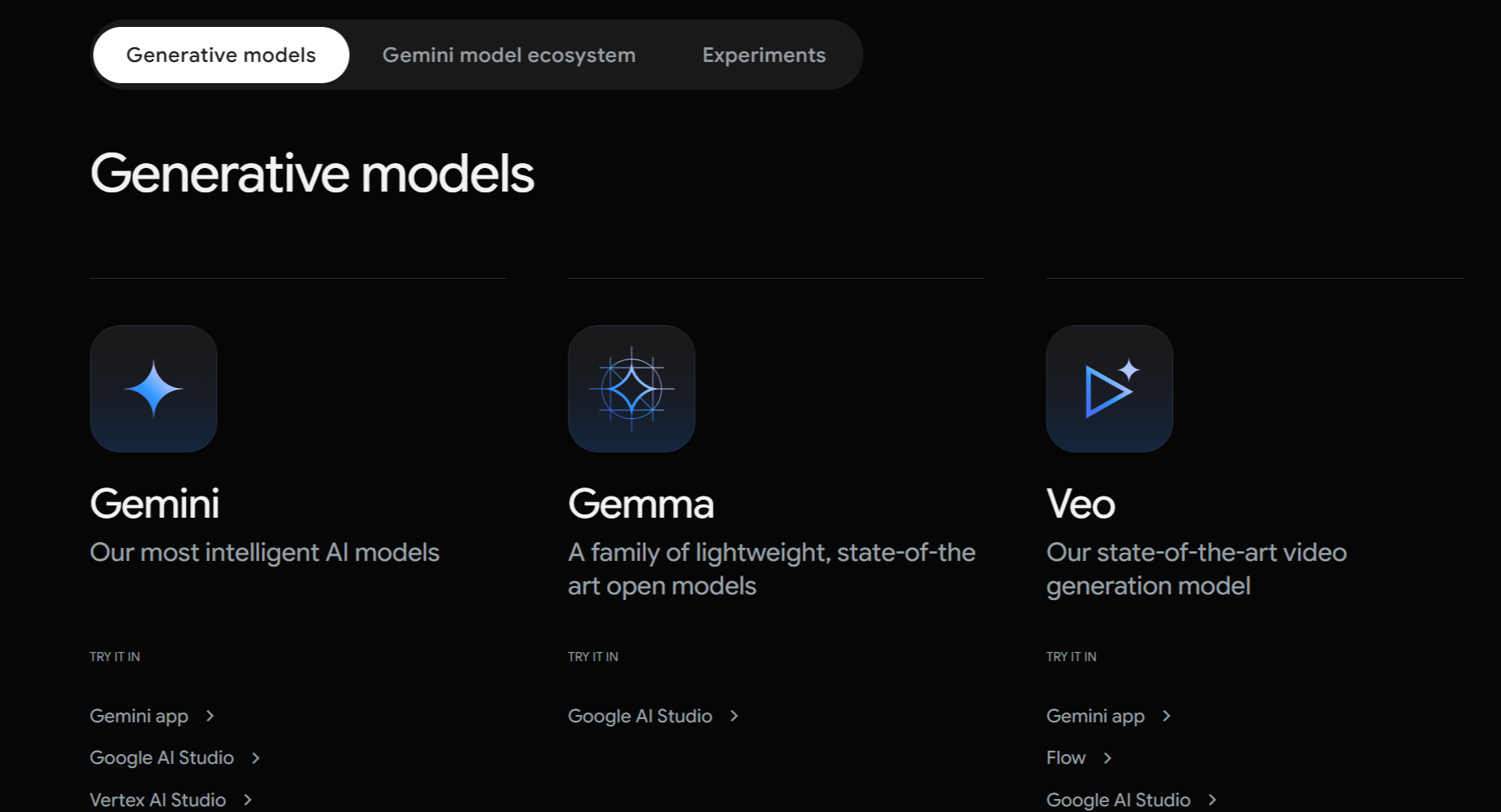

Generative AI Models

Generative AI focuses on creating new content (text, images, code, music, and video) from prompts. Google's generative models power chatbots, content creation tools, and video generators.

| Google AI Model Name | Description | Latest Version |
|---|---|---|
| Gemini | Google’s flagship multimodal large language model (LLM), working across text, code, images, audio, and video. Successor to PaLM 2; the Bard chatbot was rebranded as Gemini. | Gemini 2.5 (June 2025) |
| Gemma | Lightweight, open-weight models designed for responsible and private AI on personal devices and smaller setups. | Gemma 3 (March 2025) |
| Veo | AI model for high-quality video generation from text prompts. Designed for filmmakers and content creators. | Veo 3 (May 2025) |
| PaLM (Pathways Language Model) | Large language model focusing on reasoning, coding, and language understanding. | PaLM 2 |
| Imagen | Text-to-image generation model producing high-quality and photorealistic images from descriptions. | Imagen 4 (May 2025) |
| Chirp | Automatic speech recognition (ASR) model trained on over 100 languages for voice-to-text conversion. | Chirp v1 |
| MusicLM | AI model designed to generate music from text prompts with detailed style and structure. | MusicLM beta |
| Codey | AI model optimized for code generation, explanation, and completion, built on PaLM. | Codey v2 |

Gemini

Gemini is Google DeepMind’s most advanced family of AI models and a direct competitor to OpenAI’s GPT-4. It is multimodal: a single prompt can combine text, images, audio, video, and code, and the model reasons across all of them.
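
To make "multimodal input" concrete, here is a minimal sketch of a request body that mixes a text part and an image part in one prompt. It assumes the JSON shape of the public Gemini REST API (`contents`, `parts`, `inline_data`); the helper name `build_multimodal_request` and the model name in the URL are illustrative choices, not something this article specifies. The sketch only builds the payload and does not send it, so no API key is needed.

```python
import base64
import json

# Assumption: endpoint pattern of the public Gemini REST API.
API_URL = "https://generativelanguage.googleapis.com/v1beta/models/{model}:generateContent"


def build_multimodal_request(prompt: str, image_bytes: bytes,
                             mime_type: str = "image/png") -> dict:
    """Return a request body holding one text part and one inline image part.

    Hypothetical helper for illustration; the image is base64-encoded
    because JSON cannot carry raw bytes.
    """
    return {
        "contents": [
            {
                "parts": [
                    {"text": prompt},
                    {
                        "inline_data": {
                            "mime_type": mime_type,
                            "data": base64.b64encode(image_bytes).decode("ascii"),
                        }
                    },
                ]
            }
        ]
    }


if __name__ == "__main__":
    # Placeholder bytes stand in for a real image file.
    body = build_multimodal_request("Describe this image.", b"placeholder image bytes")
    print(API_URL.format(model="gemini-2.5-flash"))  # model name is an assumption
    print(json.dumps(body, indent=2))
```

Both parts travel in the same `parts` list, which is what lets the model relate the question to the image instead of treating them as separate requests.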

Benefits:

- Multimodal understanding (e.g., image + text + audio)

- Strong reasoning and logic skills

- Integrated with Google Workspace (Docs, Gmail, etc.)

- Supports long context windows (1M+ tokens in Gemini 1.5 Pro)
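
To give a feel for what a 1M-token context window means in practice, the following sketch estimates whether a document fits. It assumes the common rule of thumb of roughly 4 characters per token for English text; the function names and the output reserve are hypothetical, and a real tokenizer should be used when an exact count matters.

```python
CHARS_PER_TOKEN = 4  # rough heuristic (assumption), not an exact tokenizer


def estimate_tokens(text: str, chars_per_token: int = CHARS_PER_TOKEN) -> int:
    """Estimate the token count of text, rounding up."""
    return -(-len(text) // chars_per_token)  # ceiling division


def fits_in_context(text: str, context_window: int = 1_000_000,
                    reserved_for_output: int = 8_192) -> bool:
    """Check whether text fits while leaving room for the model's reply.

    The default reserve is an illustrative value, not a documented limit.
    """
    return estimate_tokens(text) <= context_window - reserved_for_output


if __name__ == "__main__":
    document = "word " * 500_000  # 2.5 million characters
    print(estimate_tokens(document), fits_in_context(document))
```

Under this heuristic, 1M tokens corresponds to about 4 million characters, which is enough for several long books in a single prompt.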

Gemma

Gemma is a family of small, efficient, open-weight models built on the same research as Gemini but optimized for local and responsible use.

Benefits:

- Open-weight and developer-friendly

- Ideal for on-device and edge applications

- Fine-tunable on private datasets

- Great for academic, enterprise, and individual AI projects

Veo

Veo is Google’s video generation model, similar to OpenAI’s Sora. It generates high-quality, cinematic videos from text prompts.

Benefits:

- Generates 1080p videos

- Handles motion, object consistency, and camera movement

- Useful for content creators, educators, and film directors

- Accepts both simple prompts and complex storyboard-style inputs

PaLM

PaLM (Pathways Language Model) focuses on language comprehension, reasoning, and code-related tasks. PaLM 2 powered Google Bard and improved natural language understanding in other Google products before Gemini succeeded it.

Imagen

Imagen is a text-to-image AI model capable of generating realistic images from simple prompts. It is known for producing photorealistic results and is useful for creative content generation, design, and education.

Chirp

Chirp is a speech recognition model that converts spoken language into text. It supports over 100 languages and enhances accessibility tools, transcription services, and voice assistants.

MusicLM

MusicLM generates music tracks from text inputs. It can understand detailed instructions about mood, instruments, and tempo, making it a powerful tool for musicians, composers, and content creators.

Codey

Codey is designed for developers and can generate, explain, and autocomplete code. Google released it through Vertex AI to help streamline software development, debugging, and code learning.

Benefits of Google AI Models

- Enhance productivity across content creation, coding, and data analysis.

- Enable natural and efficient human-computer interaction.

- Improve accessibility through speech and language processing.

- Power creative applications in art, music, and design.

- Accelerate innovation across industries like healthcare, education, and engineering.

Google keeps updating and refining these models to serve both businesses and consumers. Understanding what each one does is the first step toward using them effectively.

Google’s generative AI ecosystem is rapidly expanding:

- Gemini handles general-purpose tasks across text, images, audio, video, and code.

- Gemma brings AI capabilities to smaller, private environments.

- Veo revolutionizes how we generate and visualize video content.

These models are being integrated across Google products and are increasingly available through Google Cloud, Vertex AI, and open-source platforms.