Qwen AI Models

Qwen AI Models

Qwen is a family of large language models (LLMs) developed by Alibaba Cloud (the cloud computing arm of Alibaba Group). It is designed to compete with other top-tier AI models like OpenAI’s GPT, Anthropic’s Claude, and Meta’s LLaMA.

The name Qwen comes from “Tongyi Qianwen”, which roughly means “seeking answers to a thousand questions” in Chinese.

Latest Models

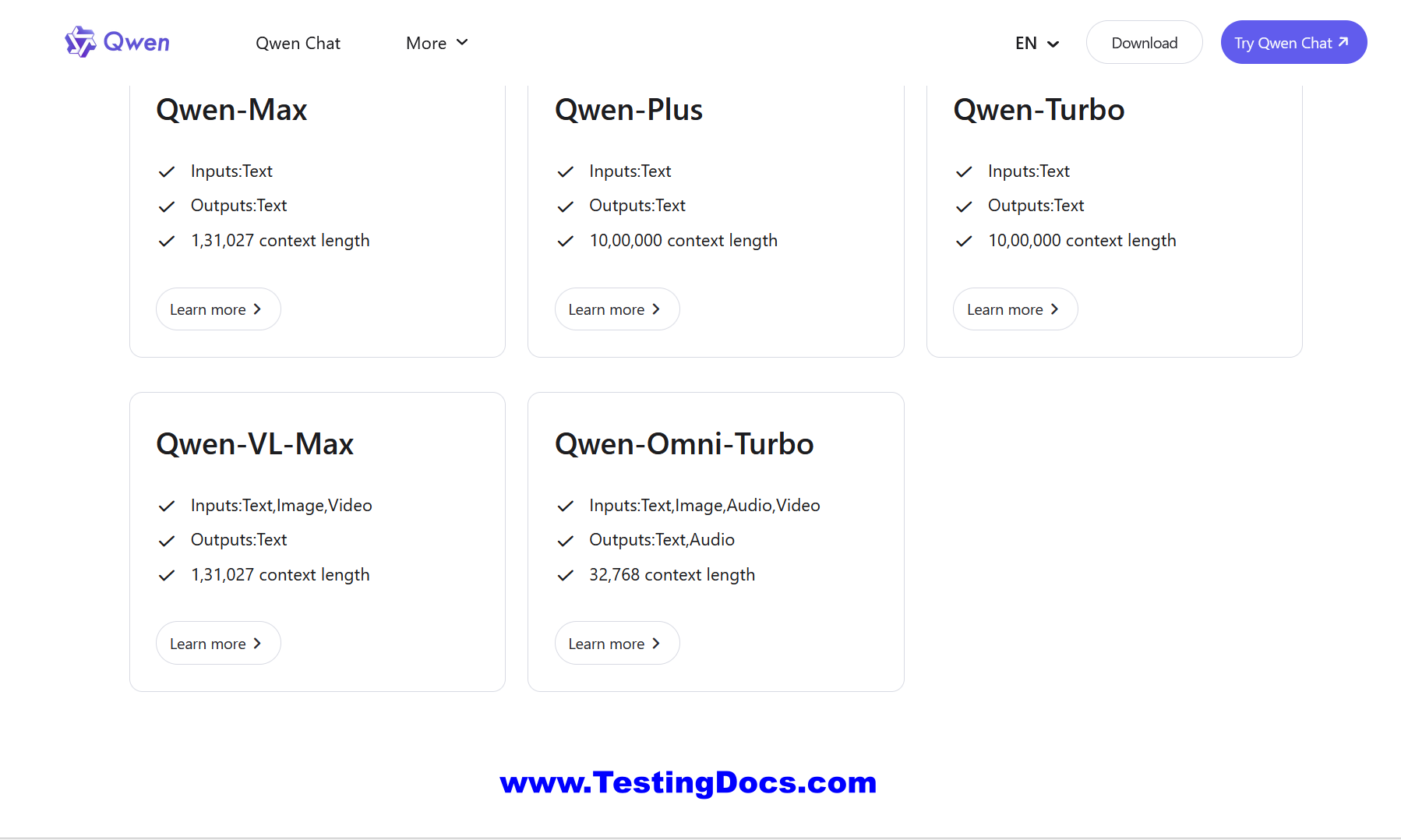

Qwen offers a family of powerful AI models designed for various use cases. This guide introduces you to the flagship Qwen models in simple terms—what they do, what they accept as input, what they produce as output, and how much text they can handle at once.

The latest flagship models are as follows:

Qwen-Max

Qwen-Max is the most powerful and versatile model in the Qwen lineup. It’s ideal for complex, multi-step tasks that require deep reasoning and high accuracy.

- Input: Text prompts, including instructions, questions, or multi-turn conversations.

- Output: Detailed, high-quality text responses such as explanations, summaries, code, or creative writing.

- Context Length: Supports up to 1,31,027 tokens, allowing it to process and remember long documents or conversations.

Qwen-Plus

Qwen-Plus strikes a balance between performance and cost. It’s great for moderately complex tasks where you need solid reasoning but don’t require the full power of Qwen-Max.

- Input: Text-based prompts, including multi-turn dialogues and structured queries.

- Output: Clear and coherent responses suitable for customer support, content generation, and data analysis.

- Context Length: Handles up to 10,00,000 tokens, making it capable of managing lengthy inputs.

Qwen-Turbo

Qwen-Turbo is the speed-optimized model in the family. It delivers fast responses at a lower cost, making it perfect for simple, high-volume tasks.

- Input: Short to medium-length text prompts, such as quick questions or basic commands.

- Output: Fast, concise answers—ideal for chatbots, real-time assistance, or lightweight automation.

- Context Length: Supports up to 10,00,000 tokens, ensuring it can still manage reasonably long conversations or documents despite its speed focus.

Qwen-VL-Max

Qwen-VL-Max is a multimodal model, meaning it understands both text and images. It’s designed for tasks that involve visual content alongside language.

- Input: A combination of text, images and video —such as a photo with a question about its contents.

- Output: Textual responses that interpret, describe, or reason about the visual input (e.g., “The image shows a red apple on a wooden table.”).

- Context Length: Supports up to 1,31,027 tokens for text, plus the ability to process high-resolution images.

Qwen-Omni-Turbo

Qwen-Omni-Turbo is a fast and efficient multimodal model. It combines the speed of Qwen-Turbo with basic visual understanding capabilities.

- Input: Text, image, audio, video inputs, optimized for quick visual queries or simple image-based interactions.

- Output: Rapid, straightforward responses that connect visual and textual information.

- Context Length: Supports up to 32,768 tokens for text, along with efficient image processing for real-time applications.

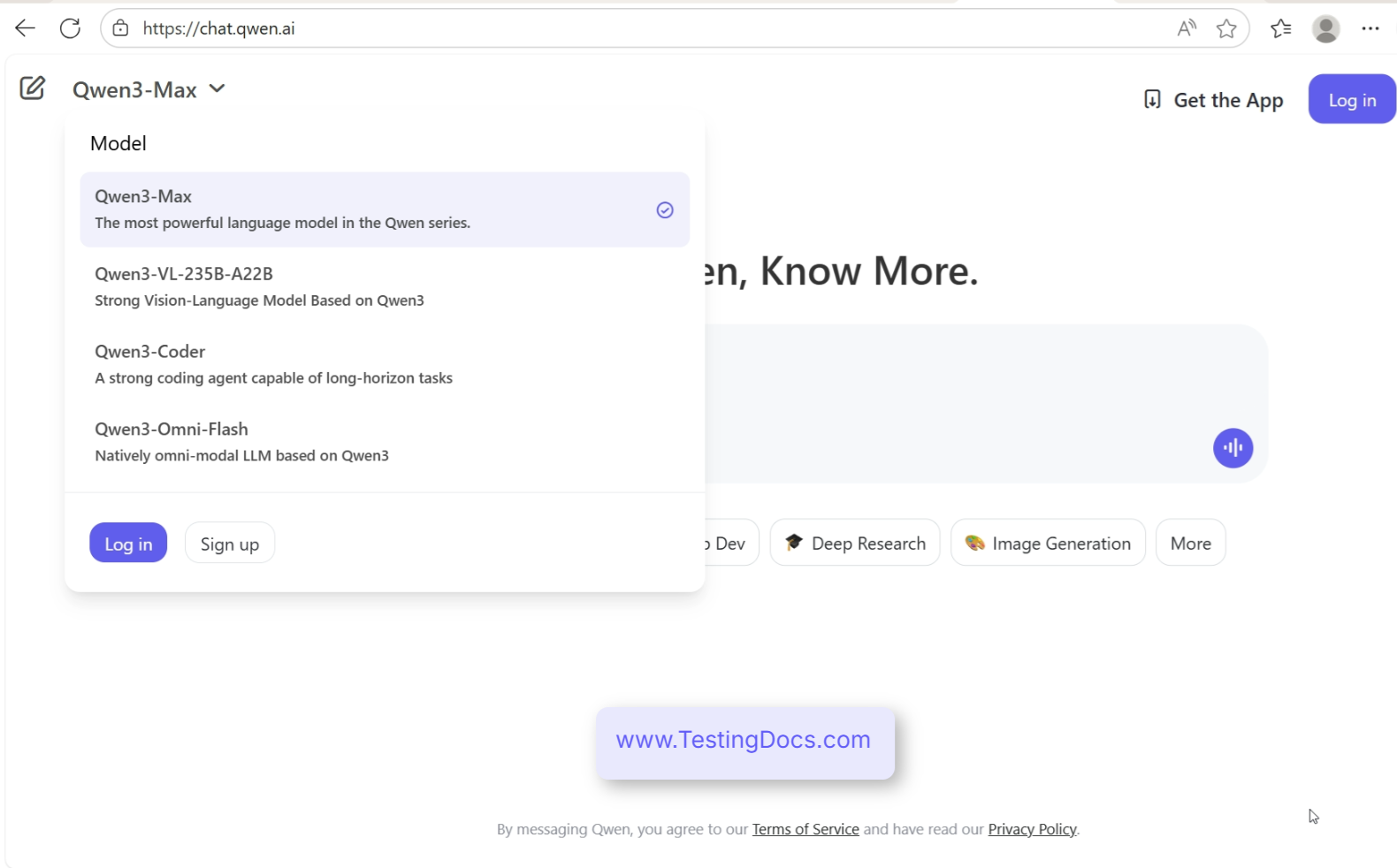

| Model | Description |

|---|---|

| Qwen3-Max | The most powerful and versatile model in the Qwen3 series, designed for complex, multi-step tasks requiring high reasoning capabilities, extensive knowledge, and strong language understanding. Ideal for demanding applications such as advanced research, strategic analysis, and comprehensive content generation. |

| Qwen3-Coder | A specialized variant optimized for programming and software development tasks. Excels at code generation, debugging, code explanation, and supporting multiple programming languages. Tailored for developers seeking intelligent coding assistance and automation. |

| Qwen3-Omni-Flash | A fast and efficient model engineered for high-speed inference and real-time applications. Balances performance and accuracy to deliver rapid responses with low latency, making it suitable for chatbots, live customer support, and time-sensitive interactive systems. |