GPT Temperature Setting

The GPT temperature setting has a significant effect on the model's responses. It controls how much randomness goes into the output, so adjusting it changes how predictable or varied the responses are.
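Temperature is commonly described as a divisor applied to the model's raw scores (logits) before the softmax. In the usual formulation, which is an assumption here because OpenAI does not publish its exact sampling code, the probability of token $i$ with logit $z_i$ at temperature $T > 0$ is:

$$
p_i = \frac{\exp(z_i / T)}{\sum_j \exp(z_j / T)}
$$

With $T = 1$ this is the plain softmax; $T < 1$ sharpens the distribution toward the highest logit, and $T > 1$ flattens it.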

As the temperature approaches zero, the model almost always picks the most likely next word, so its responses become close to deterministic and tend to be repetitive.
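A minimal sketch of this behavior, assuming the standard temperature-scaled softmax and using made-up logits for three candidate tokens. The function name softmax_with_temperature is hypothetical and not part of any OpenAI SDK.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Convert raw scores to probabilities, scaled by temperature (must be > 0)."""
    if temperature <= 0:
        raise ValueError("temperature must be > 0; use argmax for T = 0")
    scaled = [z / temperature for z in logits]
    # Subtract the max before exponentiating for numerical stability.
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical logits for three candidate next tokens.
logits = [2.0, 1.0, 0.1]

for t in (2.0, 1.0, 0.5, 0.1):
    probs = softmax_with_temperature(logits, t)
    print(f"T={t}: " + ", ".join(f"{p:.3f}" for p in probs))
```

As t drops toward zero, nearly all of the probability mass moves to the token with the highest logit, which is why low-temperature output becomes repetitive.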

You can set the temperature in the UI or in the API request.

UI

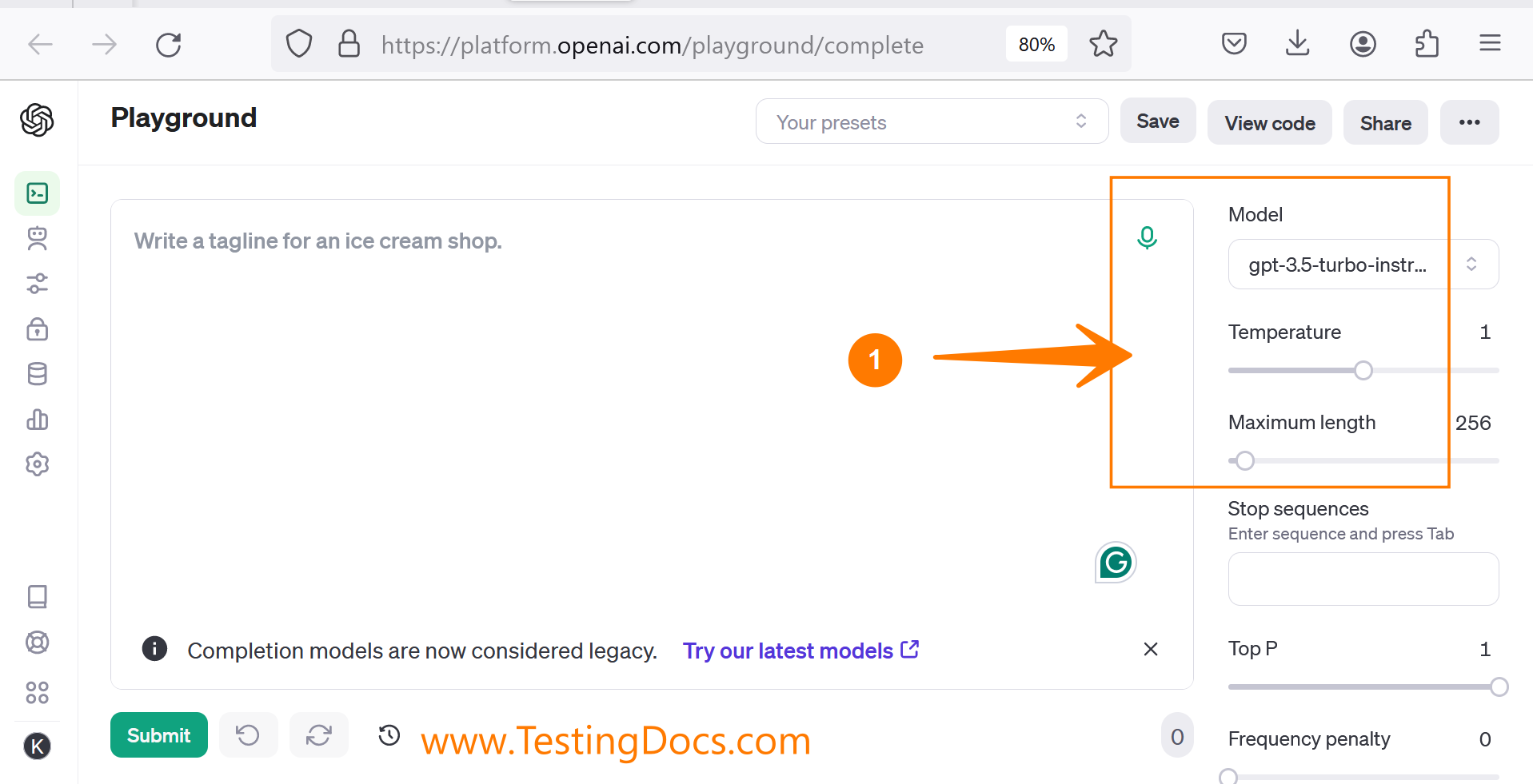

Adjust the Temperature Setting

The default temperature is 1.0, which works well for most use cases. To change it, move the slider. The OpenAI API accepts temperature values from 0 to 2.

🤖 Sample API Request

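A sample API request using the OpenAI Python SDK:

```python
from openai import OpenAI

# The client reads the API key from the OPENAI_API_KEY environment variable.
client = OpenAI()

# Completions endpoint, used with the gpt-3.5-turbo-instruct model.
response = client.completions.create(
    model="gpt-3.5-turbo-instruct",
    prompt="TestingDocs prompt",
    temperature=1.0,  # randomness of the output (default 1.0)
    max_tokens=256,  # upper limit on the number of generated tokens
    top_p=1,
    frequency_penalty=0,
    presence_penalty=0,
)

# Print the generated text.
print(response.choices[0].text)
```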

Notice that the request sets the temperature to 1.0. OpenAI generally recommends adjusting either temperature or top_p, but not both, so top_p is left at 1 here.
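If the temperature comes from user input (for example, a slider value), it can help to validate it before building the request. The sketch below assumes the documented OpenAI range of 0 to 2; build_completion_kwargs is a hypothetical helper, not an SDK function, and it does not call the API itself.

```python
def build_completion_kwargs(prompt: str, temperature: float = 1.0) -> dict:
    """Return keyword arguments for client.completions.create (hypothetical helper)."""
    # OpenAI documents temperature as a value between 0 and 2.
    if not 0.0 <= temperature <= 2.0:
        raise ValueError(f"temperature must be between 0 and 2, got {temperature}")
    return {
        "model": "gpt-3.5-turbo-instruct",
        "prompt": prompt,
        "temperature": temperature,
        "max_tokens": 256,
    }


kwargs = build_completion_kwargs("TestingDocs prompt", temperature=0.2)
print(kwargs)
```

The resulting dictionary can be unpacked into the request with client.completions.create(**kwargs).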

⬇️Lower Temperature

At lower temperatures, GPT more consistently chooses the highest-probability words. This helps when a task has a single correct answer, such as filling in the blank in a sentence or answering a factual question.
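The sketch below shows why low temperatures favor the most likely token. It samples from a temperature-scaled softmax and treats a temperature of 0 as greedy decoding (always the top token); that is a common convention but an assumption about how any particular service handles 0. The logits and the sample_token name are hypothetical.

```python
import math
import random
from collections import Counter


def sample_token(logits: list[float], temperature: float, rng: random.Random) -> int:
    """Return the index of a token sampled at the given temperature."""
    if temperature == 0:
        # Greedy decoding: always pick the highest-scoring token.
        return max(range(len(logits)), key=lambda i: logits[i])
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    weights = [math.exp(s - m) for s in scaled]
    # random.choices normalizes the weights, so no explicit division is needed.
    return rng.choices(range(len(logits)), weights=weights, k=1)[0]


logits = [2.0, 1.0, 0.1]  # hypothetical scores for three candidate tokens
rng = random.Random(42)

for t in (0.0, 0.3, 1.0):
    counts = Counter(sample_token(logits, t, rng) for _ in range(1000))
    print(f"T={t}: {dict(sorted(counts.items()))}")
```

At T=0 every draw returns token 0; as the temperature rises, tokens 1 and 2 are sampled more often.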

⬆️Higher Temperature

At higher temperatures, GPT samples lower-probability words more often, so the output is more varied. This suits tasks such as brainstorming ideas and telling stories.
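One way to quantify "more varied" is the Shannon entropy of the next-token distribution: the higher the entropy, the less predictable the sampled token. This sketch, again assuming the standard temperature-scaled softmax and made-up logits, shows entropy rising with temperature. entropy_bits is a hypothetical helper name.

```python
import math


def entropy_bits(logits: list[float], temperature: float) -> float:
    """Shannon entropy (in bits) of the temperature-scaled softmax over logits."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    probs = [e / total for e in exps]
    return -sum(p * math.log2(p) for p in probs if p > 0)


logits = [2.0, 1.0, 0.1]  # hypothetical scores for three candidate tokens
for t in (0.2, 0.7, 1.0, 1.5, 2.0):
    print(f"T={t}: entropy = {entropy_bits(logits, t):.3f} bits")
```

For three tokens the maximum possible entropy is log2(3), about 1.585 bits, which the value approaches as the temperature keeps rising and the distribution flattens toward uniform.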

| GPT Temperature | Model Response |
|---|---|
| Low Temperature | Precise |
| Medium Temperature | Balanced |
| High Temperature | Creative/Imaginative |

Lower temperature values produce more predictable and precise responses, while higher temperatures produce more creative or imaginative responses.