Overview

Nucleus sampling, also known as top_p sampling, restricts the model to the most probable tokens whose combined probability mass reaches the value set by the top_p parameter. OpenAI recommends adjusting either of the following, but not both:

- nucleus sampling or

- temperature sampling

Top_p Sampling

The top_p parameter controls the diversity of the text generated by the GPT model. It sets a cumulative probability threshold: only the most probable tokens whose probabilities together reach the threshold are candidates for the next token. To understand top_p sampling, we need to understand two things.

- Probability Distribution

- Cumulative Distribution

Probability Distribution

After processing input tokens, the GPT model predicts the next token by assigning probabilities to all possible next tokens in its vocabulary. This results in a probability distribution over the vocabulary.
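As a rough illustration of how such a distribution can arise, the sketch below turns raw scores (logits) into probabilities with a softmax. The five-token vocabulary and the logit values are made up for the example, and real models work over vocabularies of many thousands of tokens.

```python
import math

def softmax(logits):
    """Convert raw scores (logits) into probabilities that sum to 1."""
    # Subtracting the maximum keeps exp() numerically stable
    # without changing the resulting probabilities.
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical vocabulary and logits, chosen only for illustration.
vocab = ["cat", "dog", "bird", "fish", "car"]
logits = [2.0, 1.5, 0.5, 0.1, -1.0]

probs = softmax(logits)
for token, p in zip(vocab, probs):
    print(f"{token}: {p:.3f}")
```

Every token receives a non-zero probability, which is why a further step is needed to cut off the unlikely tail.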

Cumulative Distribution

The probabilities are sorted in descending order, and a running (cumulative) sum is calculated. This identifies the smallest set of tokens whose cumulative probability reaches or exceeds a threshold p. top_p gets its name from this threshold p, which is the fraction of the total probability mass that is kept.
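The sort-and-accumulate step can be sketched as follows. The function name `nucleus` and the probability values are assumptions made for this example, and whether the cutoff uses "reaches" or "strictly exceeds" varies between implementations.

```python
def nucleus(probs, top_p):
    """Return indices of the smallest set of tokens whose
    cumulative probability reaches top_p."""
    # Token indices ordered from most to least probable.
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for i in order:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break  # threshold reached, the remaining tokens are dropped
    return kept

# Made-up distribution over five tokens (sums to 1).
probs = [0.5, 0.25, 0.125, 0.0625, 0.0625]
print(nucleus(probs, 0.9))
```

With these values the running sums are 0.5, 0.75, 0.875 and 0.9375, so the first four tokens are kept for a threshold of 0.9 and the last one is dropped.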

Example

For example, a value of 0.9 means that only the tokens making up the top 90% of the probability mass are considered, i.e. the smallest set of most probable tokens that together account for at least 90% of the total probability.

The next token is randomly sampled from this set rather than from the entire vocabulary or a fixed number of top-k most likely tokens. This method ensures that the tokens chosen are both likely and diverse.

Likely: Every candidate belongs to the high-probability set that makes up the threshold p.

Diverse: Even less likely tokens have a chance to be selected, as long as they’re within the top-p set.
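Putting the pieces together, a minimal sketch of the sampling step might look like this. It is not the model's actual implementation; `sample_top_p` and the probability values are hypothetical, and the kept probabilities are used as relative weights so that they are effectively renormalised.

```python
import random
from collections import Counter

def sample_top_p(probs, top_p, rng=random):
    """Draw one token index from the top-p (nucleus) set."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for i in order:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break
    # random.choices treats the weights as relative, so the kept
    # probabilities do not need to sum to 1.
    weights = [probs[i] for i in kept]
    return rng.choices(kept, weights=weights, k=1)[0]

# Made-up distribution over five tokens.
probs = [0.5, 0.25, 0.125, 0.0625, 0.0625]
rng = random.Random(0)  # seeded so the run is repeatable
draws = [sample_top_p(probs, 0.9, rng) for _ in range(1000)]
print(Counter(draws))
```

In this toy run the last token (index 4) can never be drawn because it falls outside the set, while the four kept tokens appear roughly in proportion to their probabilities.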

Adjust the value

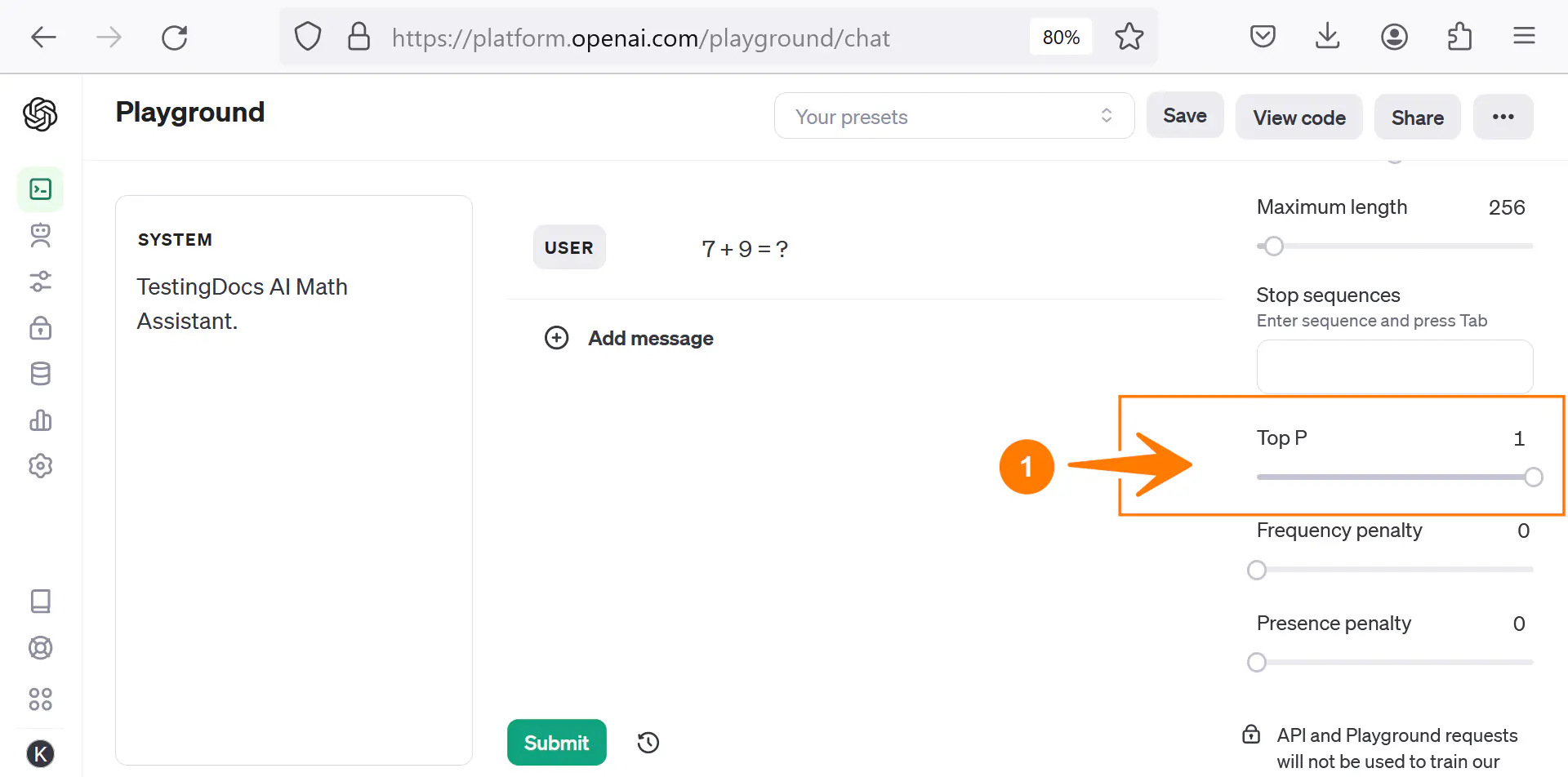

We can adjust the top_p value in API requests or the Playground UI while interacting with the model.

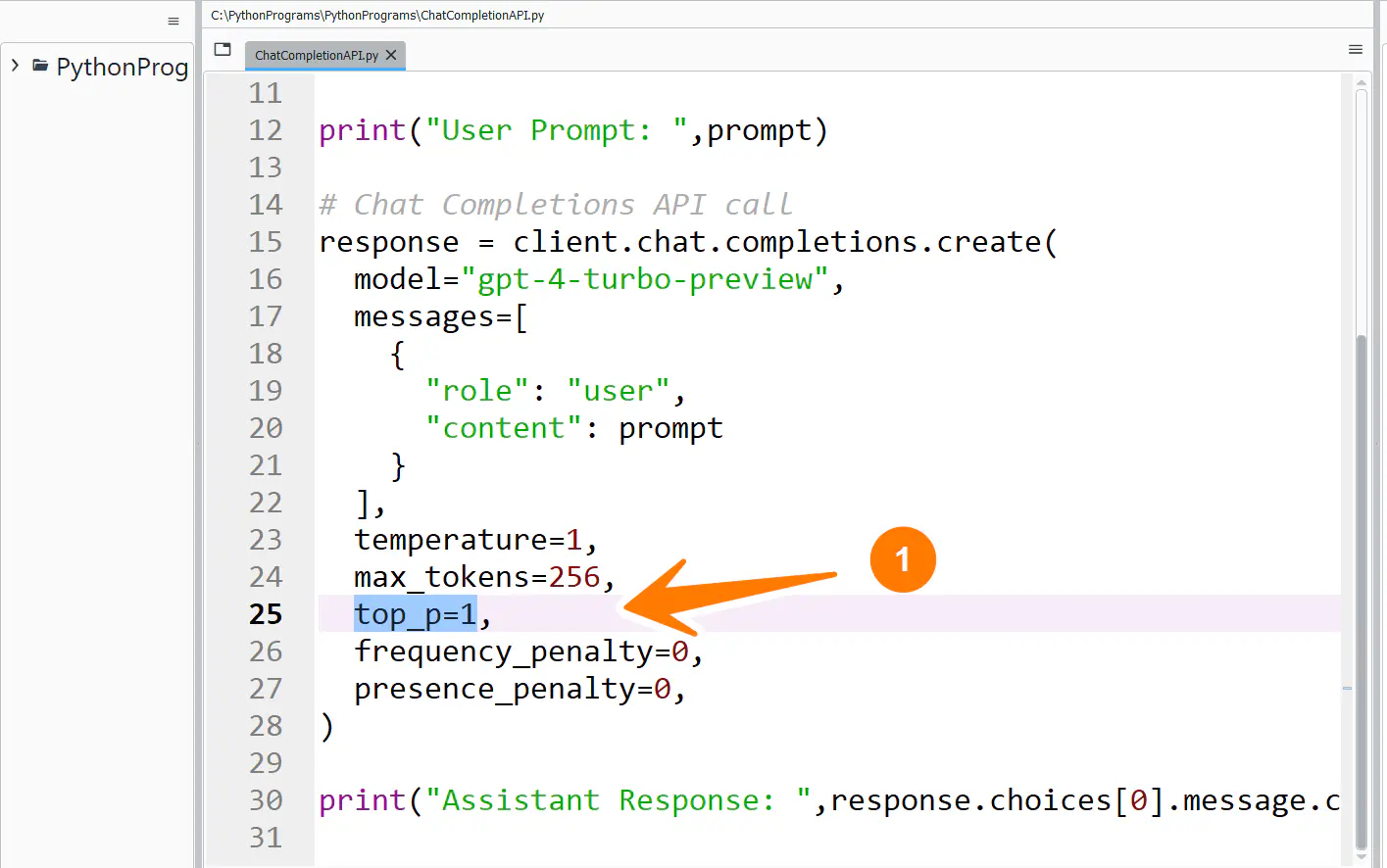

API Request

In a Python API call, the value is passed as a keyword argument (in a raw JSON request body, the same setting is the "top_p" field):

top_p=<value>,

The top_p value ranges from 0 to 1, both inclusive.
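As a sketch of where the parameter sits in a request, the snippet below builds a JSON body and checks the range before sending anything. The helper `build_request` is hypothetical, `"MODEL_NAME"` is a placeholder, and the `messages` layout is an assumption based on chat-style requests. Only the `top_p` field is the point here.

```python
import json

def build_request(prompt, top_p=1.0, model="MODEL_NAME"):
    """Build a request body with a validated top_p value."""
    if not 0.0 <= top_p <= 1.0:
        raise ValueError("top_p must be between 0 and 1 inclusive")
    return {
        "model": model,  # placeholder, replace with a real model name
        "messages": [{"role": "user", "content": prompt}],
        "top_p": top_p,
    }

body = build_request("Write a haiku about rain.", top_p=0.9)
print(json.dumps(body, indent=2))
```

Validating the range locally gives a clearer error message than waiting for the API to reject the request.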

The top_p parameter allows us to control the model’s output. If we set a low value, only the few most probable tokens remain candidates, so the model tends to generate more conservative, predictable text. With a higher value, more tokens remain candidates, so the output tends to be more diverse.
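One way to check the effect of the value is to count how many tokens survive the cutoff at different settings, since fewer candidates leave less room for variety. The distribution below is made up for the example, and `nucleus_size` is a hypothetical helper.

```python
def nucleus_size(probs, top_p):
    """Count how many tokens remain candidates for a given top_p."""
    cumulative = 0.0
    for count, p in enumerate(sorted(probs, reverse=True), start=1):
        cumulative += p
        if cumulative >= top_p:
            return count
    return len(probs)  # threshold never reached: keep everything

# Made-up distribution over five tokens.
probs = [0.5, 0.25, 0.125, 0.0625, 0.0625]
for top_p in (0.1, 0.5, 0.9, 1.0):
    print(f"top_p={top_p}: {nucleus_size(probs, top_p)} candidate token(s)")
```

For this toy distribution the candidate counts are 1, 1, 4 and 5, so the candidate pool grows as top_p increases.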
