Few-shot Prompting

Overview

Few-shot prompting is a technique in which a model is given a small number of worked examples inside the prompt itself, so that it can infer the task from them and produce output that follows the same pattern.

The technique comes from NLP (Natural Language Processing), where it is closely tied to few-shot learning. LLMs such as GPT-3 (Generative Pre-trained Transformer 3) have demonstrated impressive few-shot learning capabilities. Few-shot prompting helps an LLM perform better by guiding it with examples.

Few-shot prompting has been applied to various NLP tasks, including text classification, text generation, question answering, and language translation. Compared to traditional supervised learning approaches, it needs only a handful of labeled examples and no additional training, which makes it particularly useful in settings where data is scarce or rapidly changing.

For example, to have an LLM summarize news articles, we can provide a few example articles together with their summaries. The model can then use these examples as a pattern when generating summaries for new articles.
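The summarization setup described above can be sketched as a small prompt builder. This is only a minimal illustration: the function name `build_summary_prompt`, the `Article:`/`Summary:` labels, and the placeholder texts are assumptions made for this sketch, not a required format.

```python
from typing import List, Tuple


def build_summary_prompt(examples: List[Tuple[str, str]], new_article: str) -> str:
    """Assemble a few-shot summarization prompt.

    `examples` holds (article, summary) pairs. The labels used here are
    an illustrative choice, not a fixed convention.
    """
    parts = []
    for article, summary in examples:
        parts.append(f"Article: {article}\nSummary: {summary}")
    # The last block leaves the summary empty so the model completes it.
    parts.append(f"Article: {new_article}\nSummary:")
    return "\n\n".join(parts)


# Placeholder texts, invented for this sketch.
examples = [
    ("<text of first example article>", "<its summary>"),
    ("<text of second example article>", "<its summary>"),
]
prompt = build_summary_prompt(examples, "<text of the new article>")
print(prompt)
```

The resulting string can be pasted into a chat interface or sent through whichever LLM client is in use. Ending the prompt on an open `Summary:` label is one common way to signal where the model should continue.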

Examples

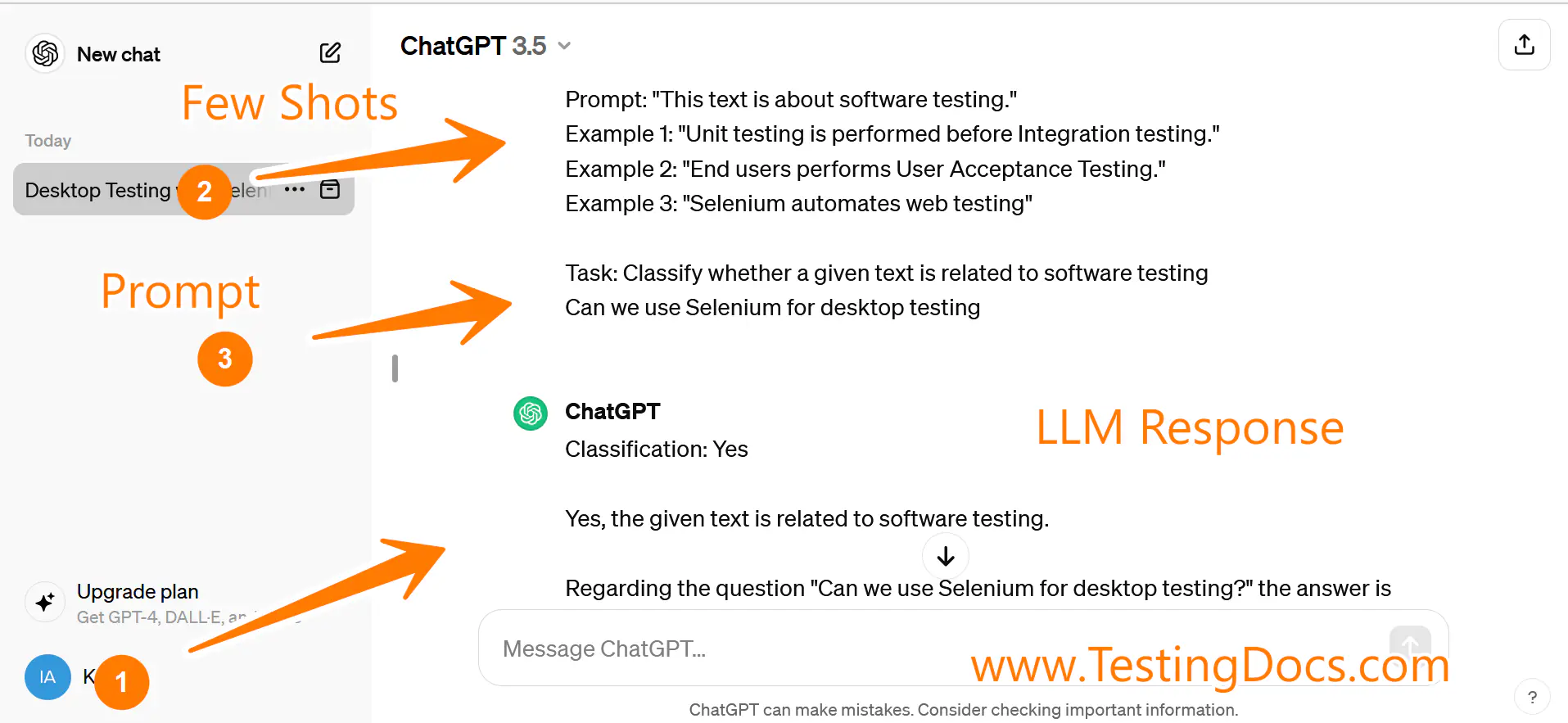

Let’s run some examples on ChatGPT:

#1

Run this few-shot user prompt on the LLM and observe its response.

Prompt: “This text is about software testing.”

Example 1: “Unit testing is performed before Integration testing.”

Example 2: “End users perform User Acceptance Testing.”

Example 3: “Selenium automates web testing.”

Task: Classify whether a given text is related to software testing.

Can we use Selenium for desktop testing?

#2

Run the following few-shot user prompt and observe the model response.

Prompt: “This text is about software testing.”

Example 1: “Unit testing is performed before Integration testing.”

Example 2: “End users perform User Acceptance Testing.”

Example 3: “Selenium automates web testing.”

Task: Classify whether a given text is related to software testing.

The nature is calm before it rains
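Both prompts share the same layout and differ only in the final text to classify, so they can be generated from one template. The sketch below shows one way to do that. The helper name `build_few_shot_prompt` and its parameters are hypothetical, and the code only builds the prompt strings. It does not call any model.

```python
from typing import List


def build_few_shot_prompt(description: str, examples: List[str], task: str, text: str) -> str:
    """Lay out a few-shot prompt in the same order as the examples above:
    description, numbered examples, task, then the text to classify."""
    lines = [f'Prompt: "{description}"']
    for i, example in enumerate(examples, start=1):
        lines.append(f'Example {i}: "{example}"')
    lines.append(f"Task: {task}")
    lines.append(text)
    return "\n\n".join(lines)


DESCRIPTION = "This text is about software testing."
EXAMPLES = [
    "Unit testing is performed before Integration testing.",
    "End users perform User Acceptance Testing.",
    "Selenium automates web testing.",
]
TASK = "Classify whether a given text is related to software testing."

# The two texts used in prompts #1 and #2.
for text in ("Can we use Selenium for desktop testing?",
             "The nature is calm before it rains"):
    print(build_few_shot_prompt(DESCRIPTION, EXAMPLES, TASK, text))
    print("-" * 40)
```

Keeping the examples and the task fixed while changing only the final text makes it easier to compare how the model responds to a related input and an unrelated one.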