Zero-shot Prompting

Overview

In this tutorial, we will learn about the zero-shot prompting technique. In this technique, we give the model a task instruction without including any worked examples of that task in the prompt. The model relies on what it learned during training to predict the desired outcome.

Zero-shot prompting

Zero-shot prompting involves giving a language model a prompt that states the task directly, with no demonstrations in the prompt and no prior fine-tuning or task-specific training for it.
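To make the definition concrete, here is a minimal sketch of how a zero-shot prompt could be assembled in Python. The helper name `build_zero_shot_prompt` and the sentiment task are illustrative assumptions, not part of any library. The point is that the prompt holds only an instruction and the input, with no demonstrations.

```python
def build_zero_shot_prompt(instruction: str, input_text: str = "") -> str:
    """Return a prompt made of the task instruction and, optionally, the input.

    No worked examples are added, which is what makes the prompt zero-shot.
    (Hypothetical helper, written for illustration only.)
    """
    parts = [instruction.strip()]
    if input_text.strip():
        parts.append(input_text.strip())
    # Separate the instruction from the input with a blank line.
    return "\n\n".join(parts)


prompt = build_zero_shot_prompt(
    "Classify the sentiment of the following review as positive or negative.",
    "Review: The battery lasts all day and the screen is sharp.",
)
print(prompt)
```

A few-shot version of the same prompt would add one or more solved reviews before the input; the zero-shot version leaves them out.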

Example

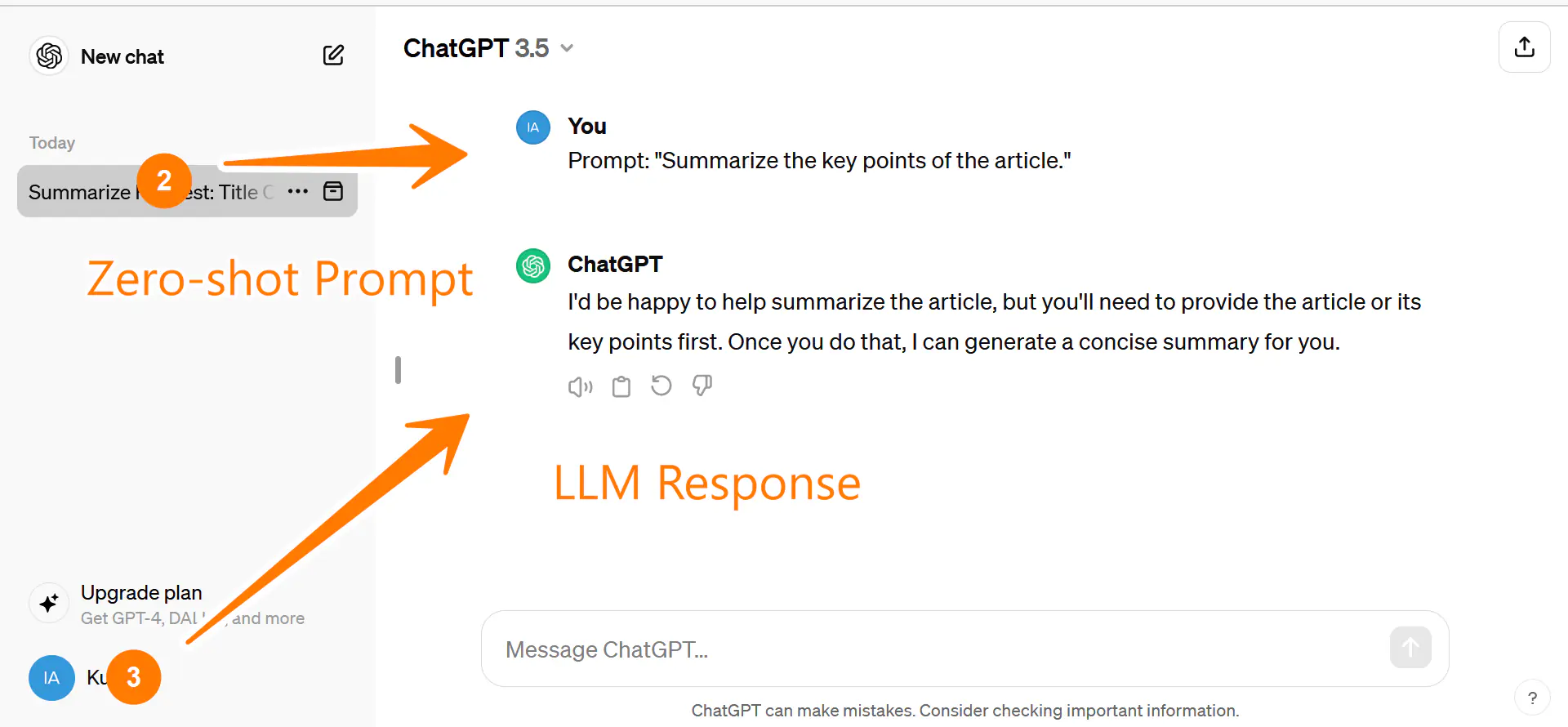

Try this on ChatGPT:

Open ChatGPT, enter the following zero-shot prompt, and observe the language model’s response.

Prompt: “Summarize the key points of the article.”

A typical response would be:

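LLMs might not perform well with this prompting technique. Zero-shot summarization means giving the language model only this instruction and expecting a summary, with no example summaries to guide it. Note that the prompt contains no article text: unless the article is supplied along with the prompt, the model has no knowledge of the specific article and its content to draw on.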
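One way to give the model the content it needs is to place the article text in the same prompt as the instruction. The sketch below is an assumption-laden illustration: `build_summary_messages` is a hypothetical helper, the article text is a placeholder, and the `role`/`content` message shape is a convention used by many chat-style APIs, not a call to any specific one. No request is actually sent.

```python
def build_summary_messages(article_text: str) -> list[dict[str, str]]:
    """Build a zero-shot summarization request as a list of chat messages.

    The instruction is the one from this tutorial; the article is appended
    so the model has something to summarize. No example summaries are given.
    (Hypothetical helper, written for illustration only.)
    """
    if not article_text.strip():
        raise ValueError("article_text is empty; there is nothing to summarize")
    user_content = (
        "Summarize the key points of the article.\n\n"
        f"Article:\n{article_text.strip()}"
    )
    return [{"role": "user", "content": user_content}]


# Placeholder text standing in for a real article.
messages = build_summary_messages("Placeholder article text goes here.")
print(messages[0]["content"])
```

The prompt stays zero-shot because it still contains no solved examples, only the instruction and the input.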

In the next tutorial, we will discuss few-shot prompting. In that technique, we provide a few examples along with the prompt.

—

More information on OpenAI LLMs