Large Language Models

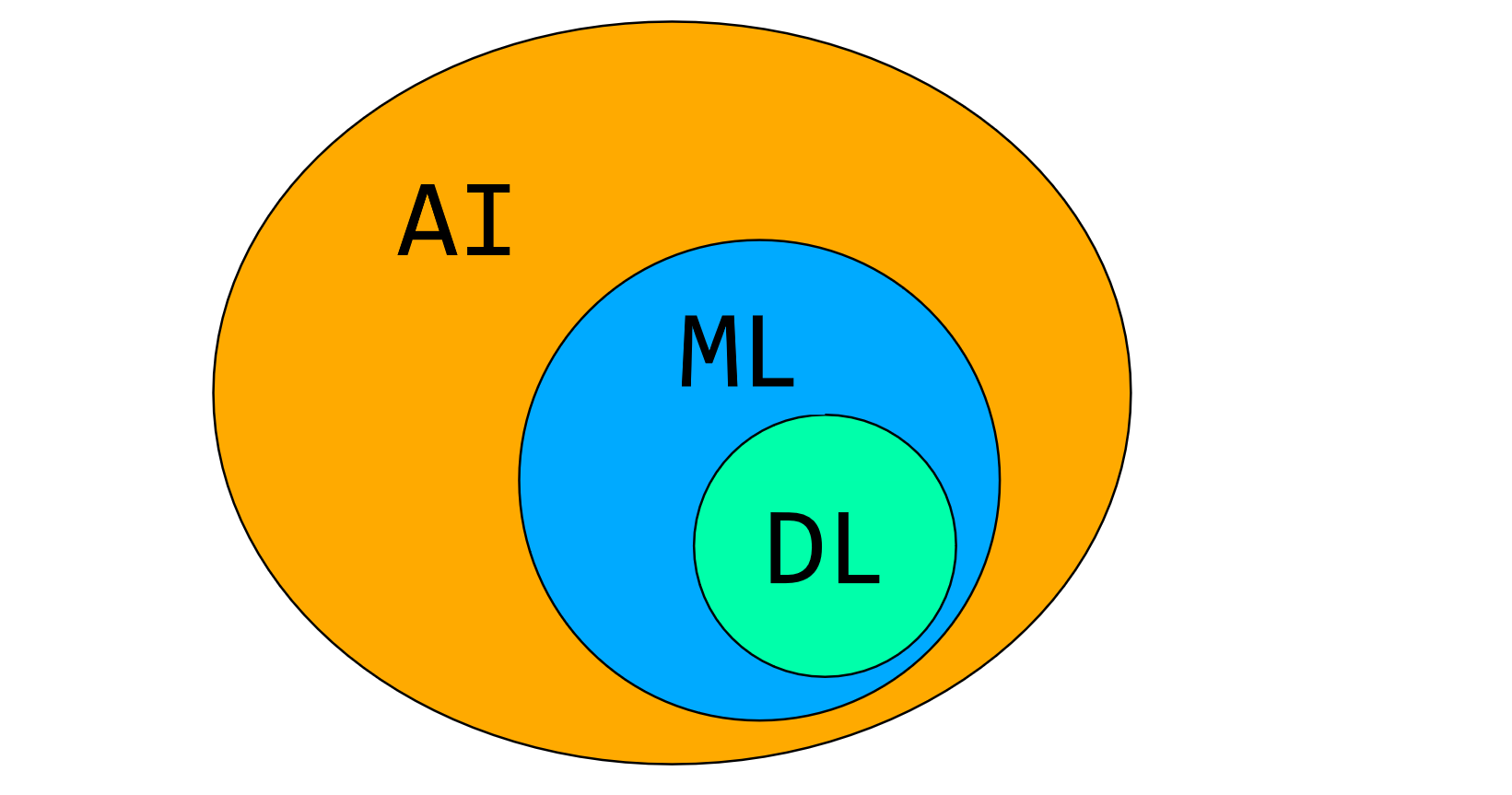

Large Language Models (LLMs) are built with Deep Learning, a branch of Machine Learning, and they are known for generating coherent, contextually relevant text from the input they receive. The three terms behind that description are defined below.

AI – Artificial Intelligence is the field concerned with building machines that simulate the intelligent behavior of living beings, particularly humans.

ML – Machine Learning is a technique that enables computers to learn from data. It uses algorithms and statistical models that let a machine improve its performance on a specific task without being explicitly programmed for it. Instead of following hand-written rules, a model is trained on datasets and picks up the patterns itself.
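As a minimal sketch of "learning from data", the Python snippet below fits a straight line to a handful of points with ordinary least squares. The dataset (hours studied versus exam score) and the names `fit_line` and `predict` are made up for illustration. No rule such as "each extra hour adds two points" is written into the code; the slope and intercept come from the data.

```python
def fit_line(xs, ys):
    """Fit y = w * x + b by ordinary least squares (closed-form solution)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance(x, y) / variance(x)
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    w = covariance / variance
    b = mean_y - w * mean_x
    return w, b


# Hypothetical training data: hours studied -> exam score
hours = [1, 2, 3, 4, 5]
scores = [52, 54, 56, 58, 60]

w, b = fit_line(hours, scores)


def predict(x):
    """Use the learned slope and intercept on an unseen input."""
    return w * x + b


print(w, b)        # 2.0 50.0
print(predict(6))  # 62.0
```

Changing the training data changes `w` and `b` without touching the code, which is the sense in which the model is "not explicitly programmed".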

DL – Deep Learning is a machine learning technique that uses neural networks with many layers to process complex data and extract meaningful insights. It is well suited to training computers to recognize patterns and make decisions from that data.

Large Language Models

Large Language Models (LLMs) are Artificial Intelligence (AI) systems that use neural networks to process large amounts of natural language data. Trained on vast amounts of text, they respond to user queries by inferring the context and intent of the user’s prompt and generating a relevant response.

They are called “Large” because they are trained on vast amounts of data and have many parameters (the numeric weights adjusted during training), which allow them to capture the nuances of natural language.
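To make "many parameters" concrete, here is a rough sketch that counts the weights and biases in a plain fully connected network. The function name and layer sizes are hypothetical, and real LLMs are built from Transformer layers whose counts are computed differently, but the arithmetic shows how quickly the number grows as layers get wider.

```python
def count_dense_parameters(layer_sizes):
    """Count weights and biases in a fully connected network.

    layer_sizes lists the number of units per layer, input first.
    """
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out  # one weight per input-output connection
        total += n_out         # one bias per output unit
    return total


tiny_network = [4, 8, 2]           # hypothetical sizes
wider_network = [512, 2048, 512]   # hypothetical sizes

print(count_dense_parameters(tiny_network))   # 58
print(count_dense_parameters(wider_network))  # 2099712
```

Widening three layers already takes the count from 58 to about two million. LLMs stack many such layers, which is how they reach parameter counts in the billions.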

Examples

Two well-known examples of LLMs are:

- GPT

- BERT

GPT

GPT (Generative Pre-trained Transformer) is a series of models developed by OpenAI, including GPT-1, GPT-2, GPT-3, and GPT-4. They are known for generating coherent and contextually relevant text from a given prompt.
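The generation loop behind this can be sketched with a toy model. GPT models predict the next token with a Transformer neural network; the snippet below swaps in a much simpler technique, bigram word counts, purely to show the "predict the next word, append it, repeat" loop. The corpus and the function names `train_bigram_model` and `generate` are invented for illustration and are not part of any OpenAI API.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.split()
    follower_counts = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        follower_counts[current_word][next_word] += 1
    return follower_counts


def generate(model, prompt, max_new_words=5):
    """Extend a non-empty prompt one word at a time.

    Each step picks the most frequent follower of the last word
    (greedy decoding) and stops early if no follower is known.
    """
    output = prompt.split()
    for _ in range(max_new_words):
        followers = model.get(output[-1])
        if not followers:
            break
        output.append(followers.most_common(1)[0][0])
    return " ".join(output)


# Hypothetical training text
corpus = "the cat sat on the mat the cat sat on the rug the cat ran"
model = train_bigram_model(corpus)

print(generate(model, "the cat", max_new_words=3))  # the cat sat on the
```

A bigram model only looks at the last word, so its output drifts quickly. A Transformer conditions each prediction on the whole preceding prompt, which is what makes the generated text contextually relevant.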

BERT

BERT (Bidirectional Encoder Representations from Transformers), created by Google, is designed to understand a word from the context on both its left and its right. Applied to search queries, this makes it possible to understand the intent behind the search.
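Why context on both sides matters can be shown with a toy fill-in-the-blank task. BERT itself is a Transformer trained on large text corpora; the sketch below replaces it with simple counts of which word appears between a given left and right neighbor. The sentences, the `[MASK]` placeholder handling, and the function names are assumptions made for illustration.

```python
from collections import Counter, defaultdict


def train_context_model(sentences):
    """For each (left word, right word) pair, count the words seen between them."""
    fillers = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for left, middle, right in zip(words, words[1:], words[2:]):
            fillers[(left, right)][middle] += 1
    return fillers


def fill_mask(model, sentence, mask_token="[MASK]"):
    """Guess the masked word from its left and right neighbors.

    Assumes the mask has a word on each side. Returns None when the
    neighbor pair was never seen in training.
    """
    words = sentence.split()
    i = words.index(mask_token)
    candidates = model.get((words[i - 1], words[i + 1]))
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


# Hypothetical training sentences
sentences = [
    "the dog barked loudly",
    "the cat meowed softly",
    "the dog barked again",
]
model = train_context_model(sentences)

print(fill_mask(model, "the [MASK] barked"))  # dog
print(fill_mask(model, "the [MASK] meowed"))  # cat
```

Both masked sentences have the same left context ("the"), so a model reading only left to right could not tell them apart at that position. The word to the right of the mask is what settles the answer.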

Use cases for LLMs

LLMs are trained on vast amounts of data to recognize patterns in language, allowing them to complete text prompts, answer questions, translate languages, and create content. Some of the use cases for LLMs are as follows:

- Customer service

- Code generation

- Content generation

- Language Translation

- Data Analysis

- Marketing & Advertising

Customer service

LLMs are used to power chatbots and virtual assistants, providing human-like responses in customer service, technical support, and personal assistance.

Code generation

Developers use LLMs to write, review, and debug code, and to generate code snippets from natural language descriptions.

Content generation

Writers and content creators use LLMs to generate ideas, create drafts, and write articles, poems, and stories.

Language Translation

LLMs can translate text between various languages, which makes them useful for global communication and for translating documents and websites.

Data Analysis

LLMs help analyze qualitative data, extract insights, and make predictions based on textual data.

Marketing & Advertising

LLMs can generate creative content for marketing campaigns and marketing memos. They can also analyze consumer sentiment in social media posts and reviews.

The technology behind LLMs has advanced rapidly in recent years, improving their ability to understand prompts and generate accurate responses.